Troubles with the Infinite

Real analysis is born out of our desire to understand infinite processes, and to overcome the difficulties raised by taking infinity seriously in this way. To appreciate this, we begin with an overview of some famous results from antiquity, as well as several paradoxes that arise when we take them seriously without being careful.

The Diagonal of a Square

Around 3700 years ago, a Babylonian student was assigned a homework problem, and their work (in clay) fortuitously survived to the modern day.

The problem involved measuring the length of the diagonal of a square of side length

Definition 1 (Base Systems for Numerals) If

Numbers between

The Babylonians used base

Which, in base 60 denotes

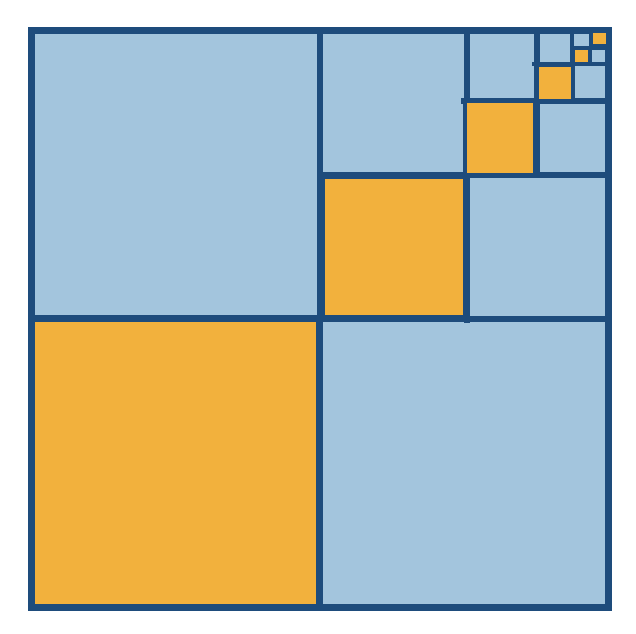
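To make base-60 notation concrete, here is a small Python sketch that turns a list of sexagesimal digits (whole part first, then sixtieths, then 3600ths, and so on) into an exact fraction. The function name `sexagesimal_to_fraction` and the digit-list convention are our own choices for illustration. The example digits 1;24,51,10 are the value usually transcribed from the Babylonian tablet as its approximation to the diagonal of a unit square.

```python
from fractions import Fraction

def sexagesimal_to_fraction(digits):
    """Interpret digits [d0, d1, d2, ...] as d0 + d1/60 + d2/60**2 + ..."""
    value = Fraction(0)
    for place, digit in enumerate(digits):
        value += Fraction(digit, 60 ** place)
    return value

approx = sexagesimal_to_fraction([1, 24, 51, 10])
print(approx)              # 30547/21600
print(float(approx))       # decimal approximation
print(float(approx) ** 2)  # compare with 2
```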

Exercise 1 By inscribing a regular hexagon in a circle, the Babylonians approximated

The tablet itself does not record how the Babylonians came up with so accurate an approximation, but we have been able to reconstruct their reasoning in modern times.
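The reconstruction most often given (assumed here; the details may differ from the example that follows) treats √N as the side of a square of area N: start from any rectangle of area N, replace one side by the average of the two sides, and shrink the other so the area stays N. A minimal Python sketch with exact fractions, where the function name `babylonian_sqrt` is our own:

```python
from fractions import Fraction

def babylonian_sqrt(area, steps, width=1):
    """Rectangle-averaging approximations to sqrt(area).

    Start from a width x (area / width) rectangle. Each step replaces the
    width by the average of the two sides and recomputes the height so the
    area is unchanged. Returns the list of widths after each step.
    """
    a = Fraction(width)
    b = Fraction(area) / a
    widths = []
    for _ in range(steps):
        a = (a + b) / 2          # average the two side lengths
        b = Fraction(area) / a   # keep the area fixed
        widths.append(a)
    return widths

# Starting from the 1 x 2 rectangle: prints 3/2, 17/12, 577/408 with decimals.
for w in babylonian_sqrt(2, 3):
    print(w, float(w))
```

Because the average of two different numbers exceeds their geometric mean, every width produced this way overshoots √2 slightly, which is why the sequence decreases toward it.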

Example 1 (Babylonian Algorithm Computing

Starting from a rectangle with side lengths 1 and 2, applying this procedure once improves our estimate from

Exercise 2 (Babylonian Algorithm Computing

Exercise 3 (Computing Cube Roots) Can you modify the Babylonians’ procedure, which found approximations of

Here, instead of starting with a rectangle of sides

Start with a simple rectangular prism of volume

It is clear from other Babylonian writings that they knew this was merely an approximation, but it took over a thousand years before we had more clarity on the nature of

Pythagoras

We often remember the Pythagoreans for the theorem bearing their name. But while they did prove this, the result (likely without proof) was known for millennia before them. The truly new and shocking contribution to mathematics was the discovery that there must be numbers beyond the rationals if we wish to do geometry.

Theorem 1 (

To give a proof of this fact we need one elementary result of number theory, known as Euclid’s Lemma (which says that if a prime

Proof. (Sketch) Assume

Thus, we can write

But now we’ve found that both

Exercise 4 Following analogous logic, prove that

Knowing now that

Definition 2 (The Babylonian Algorithm and Number Theory) Because

Exercise 5 (The Babylonian Algorithm and Number Theory) Prove that all approximations produced by the Babylonian sequence starting from the rectangle with sides

To accommodate this discovery, the Greeks had to add a new number to their number system; in fact, after really absorbing the argument, they needed to add many. Things like

Quadrature of the Parabola

The idea to compute some seemingly unreachable quantity by a succession of better and better approximations may have begun in Babylon, but it truly blossomed in the hands of Archimedes.

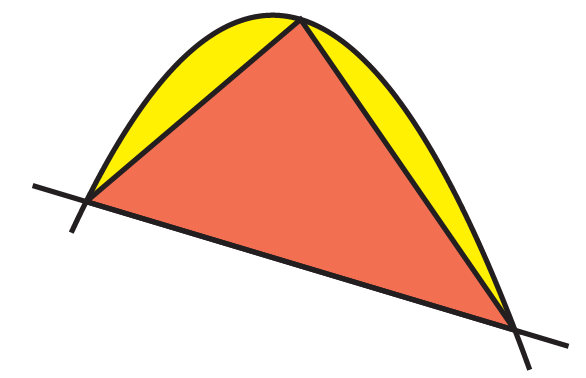

In his book The Quadrature of the Parabola, Archimedes relates the area of a parabolic segment to the area of the largest triangle that can be inscribed within.

Theorem 2 The area of the segment bounded by a parabola and a chord is 4/3 the area of the largest triangle that can be inscribed in it.

After first describing how to find the largest inscribed triangle (using a calculation of the tangent lines to a parabola), Archimedes notes that this triangle divides the remaining region into two more parabolic regions. And, he could fill these with their largest triangles as well!

These two triangles then divide the remaining region of the parabola into four new parabolic regions, each of which has their own largest triangle, and so on.

Archimedes proves that in the limit, after doing this infinitely many times, the triangles completely fill the parabolic segment, with zero area left over. Thus, the only task remaining is to add up the area of these infinitely many triangles. And here, he discovers an interesting pattern.

We will call the first triangle in the construction stage 0 of the process. Then the two triangles we make next comprise stage 1, the ensuing four triangles stage 2, and the next eight stage 3.

Proposition 1 (Area of the

If

And the total area

Now Archimedes only has to sum this series. For us moderns this is no trouble: we recognize this immediately as a geometric series

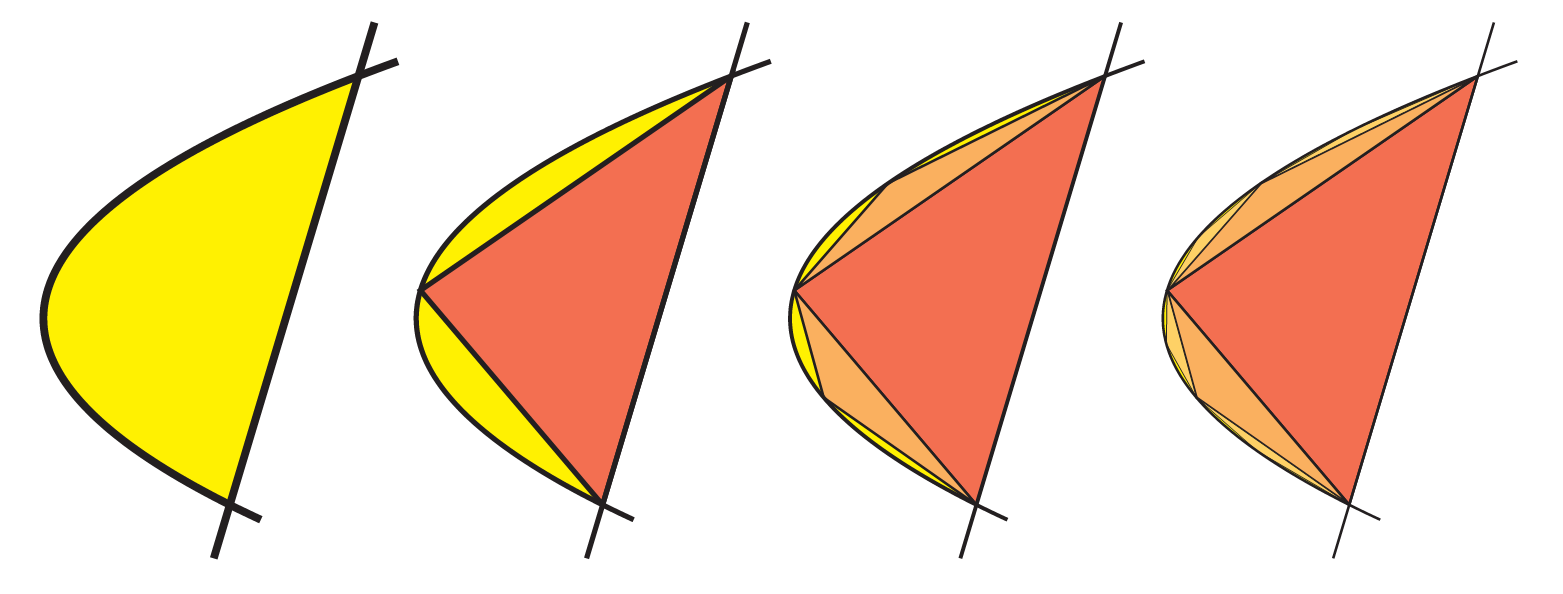
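Taking Archimedes’ pattern as given (each stage adds one quarter of the area added by the stage before), the total area in units of the stage-0 triangle is 1 + 1/4 + 1/16 + .... A short exact computation of the partial sums shows them closing in on 4/3, with the remaining gap shrinking by a factor of 4 at each stage. The function name is our own.

```python
from fractions import Fraction

def parabola_partial_sums(stages):
    """Partial sums 1 + 1/4 + ... + (1/4)**n, in units of the stage-0
    triangle's area, for n = 0, ..., stages."""
    total = Fraction(0)
    sums = []
    for n in range(stages + 1):
        total += Fraction(1, 4) ** n   # stage n adds 1/4 of what stage n-1 added
        sums.append(total)
    return sums

for n, s in enumerate(parabola_partial_sums(5)):
    print(n, s, Fraction(4, 3) - s)    # the gap to 4/3 is (1/3) * (1/4)**n
```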

But why is it called geometric? Well (this is not the only reason, but…) Archimedes was the first human to sum such a series, and he did so completely geometrically. Ignoring the leading

He then notes that this is precisely one third the area of the bounding square, as two more identical copies of this sequence of squares fill it entirely (just slide our squares to the left, or down). Thus, this infinite sum is precisely

This tells us an important fact, beyond just the area of the parabola we sought! We were looking to compute the area of a curved shape, and the procedure we found could never give us the answer exactly, but only an infinite sequence of better approximations. Being acquainted with the work of Pythagoras and the Babylonians, we might well have been led to conjecture that the area of the parabola must be irrationally related to the area of the triangle. But Archimedes showed this is not the case; our infinite sum here evaluates to a rational number,

Infinite sequences of rational numbers can sometimes produce a wholly new number, and sometimes just converge to another rational.

How can we tell? This is one motivating reason to develop a rigorous study of such objects. But it becomes even more important if we try to generalize Archimedes’ argument.

Troubles with Geometric Series

Archimedes’ quadrature of the parabola represents a monumental leap forward in human history. This is the first time in the mathematical literature where infinity is not treated as some distant ideal, but rather as a real place that can be reached. And the argument itself is an absolute classic, involving the first occurrence of an infinite series in mathematics and a wonderfully geometric summation method (hence the name geometric series, which survives to this day). The elegance of Archimedes’ calculation is almost dangerous: it’s easy to be blinded by its apparent simplicity and, like Icarus, fly too close to the sun, falling from these heights of logic directly into contradiction.

Archimedes visualized his argument for the sum

and note that multiplying

Thus,

Call that sum

We can then solve this for

This gives us what we expect when

Amazingly, it even works for negative numbers, after we think about what this means. If

Using our formula above we see that this is supposed to converge to

And using a computer to add up the first 100 terms, we see

This is pretty incredible, as our original geometric reasoning doesn’t make sense for

This is going off to infinity, and our formula gives

As we add this up term by term, we first have 1, then 0, then 1, then 0, over and over again as we repeatedly add a 1 and then immediately cancel it out. This isn’t getting close to any number at all! But our formula gives

Now we have a real question: did we just discover a new, deep fact of mathematics, namely that we can sensibly assign values to series like this that we weren’t originally concerned with? Or did we discover a limitation of our theorem? This is an interesting and important question to come out of our playing around!
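The computer experiment is easy to reproduce. The sketch below adds the first 100 (and 101) terms of 1 + r + r² + ... and prints the value 1/(1 - r) suggested by the formula. The ratios r = -1/2, r = -1 and r = 2 are illustrative choices of ours, picked to show one convergent case, one oscillating case and one divergent case; the function name is hypothetical.

```python
def geometric_partial_sum(r, n_terms):
    """Add 1 + r + r**2 + ... + r**(n_terms - 1) term by term."""
    total, term = 0.0, 1.0
    for _ in range(n_terms):
        total += term
        term *= r
    return total

# Illustrative ratios (our choice): convergent, oscillating, divergent.
for r in (-0.5, -1.0, 2.0):
    print(f"r = {r}: 100 terms -> {geometric_partial_sum(r, 100):.6g}, "
          f"101 terms -> {geometric_partial_sum(r, 101):.6g}, "
          f"formula 1/(1 - r) = {1 / (1 - r):.6g}")
```

For r = -1 the partial sums alternate between 1 and 0 while the formula reports 1/2, and for r = 2 they grow without bound while the formula reports -1.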

Thus far, we haven’t seen any cases where our theorem has output any ‘obviously’ wrong answers, so we may be inclined to trust it. But this does not hold up to further scrutiny: what about when

which is clearly going to infinity. But our formula disagrees, as it would have you believe the sum is

Exercise 6 Explain what goes wrong with the argument when

The Circle Constant

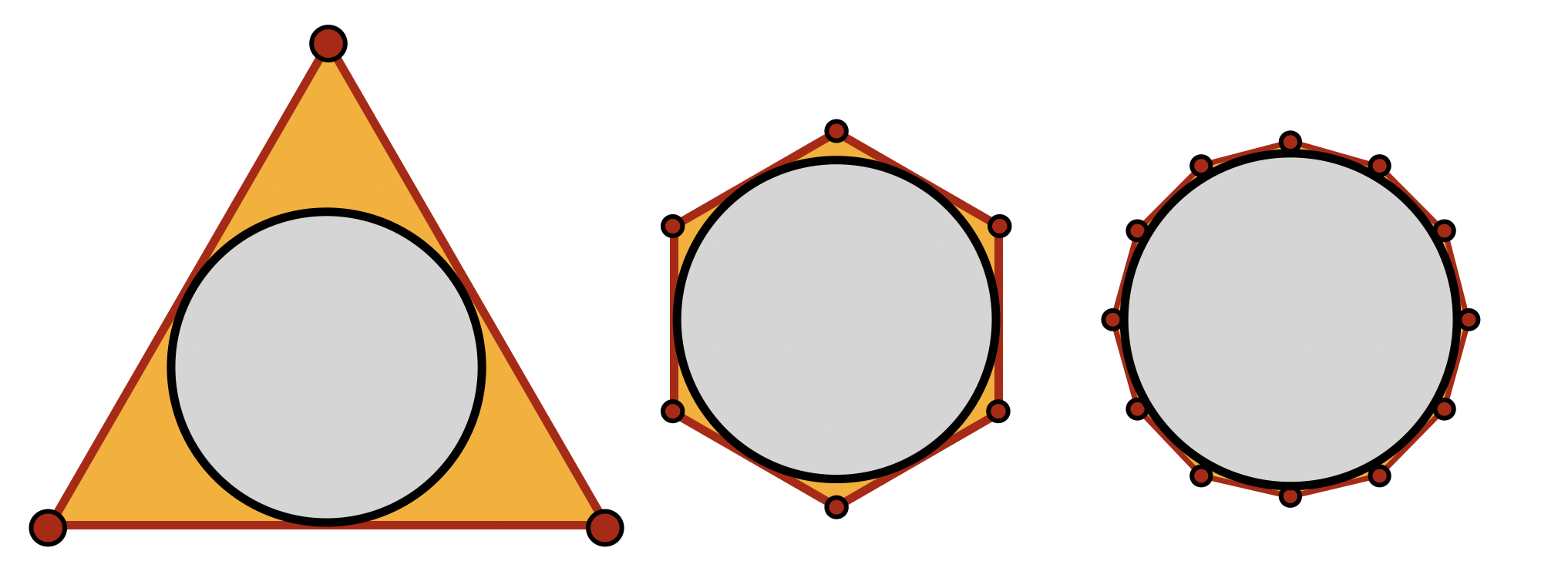

The curved shape that everyone was really interested in was not the parabola, but the circle. Archimedes tackles this in his paper The Measurement of the Circle, where he again constructs a finite sequence of approximations built from triangles, and then reasons about the circle out at infinity. First, we need a definition:

Definition 3 (

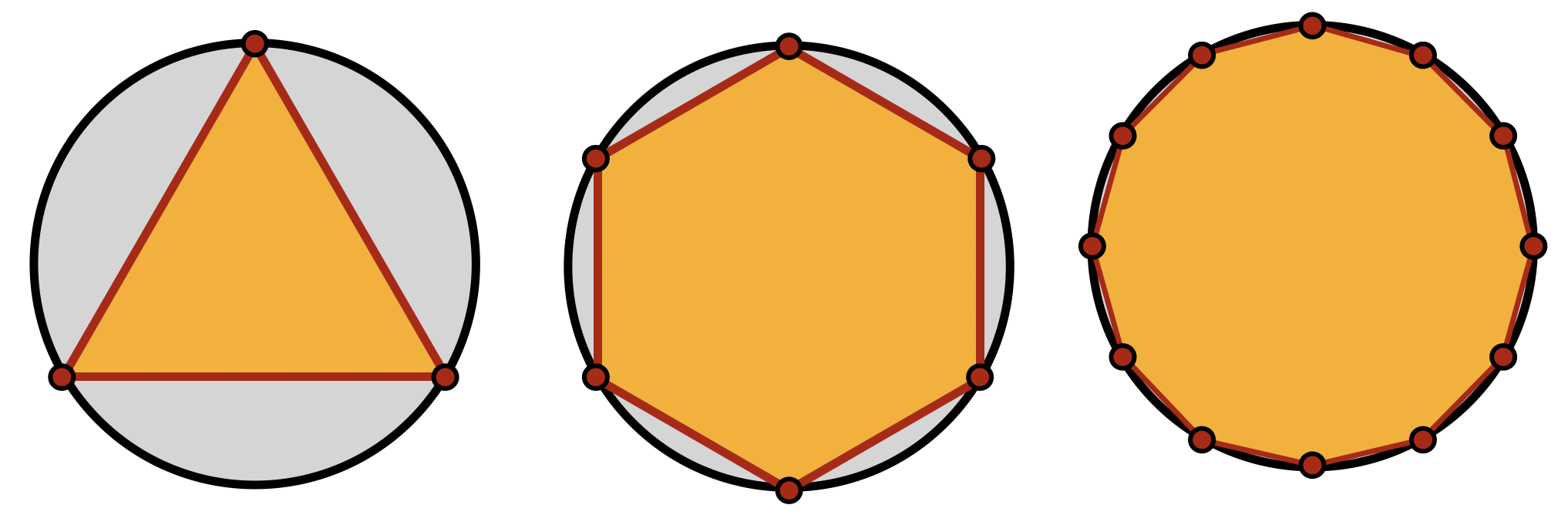

Archimedes came up with a sequence of overestimates and underestimates for

Any polygon inside the unit circle gave an underestimate, and any polygon outside gave an overestimate. The more sides the polygon had, the better the approximations would be.

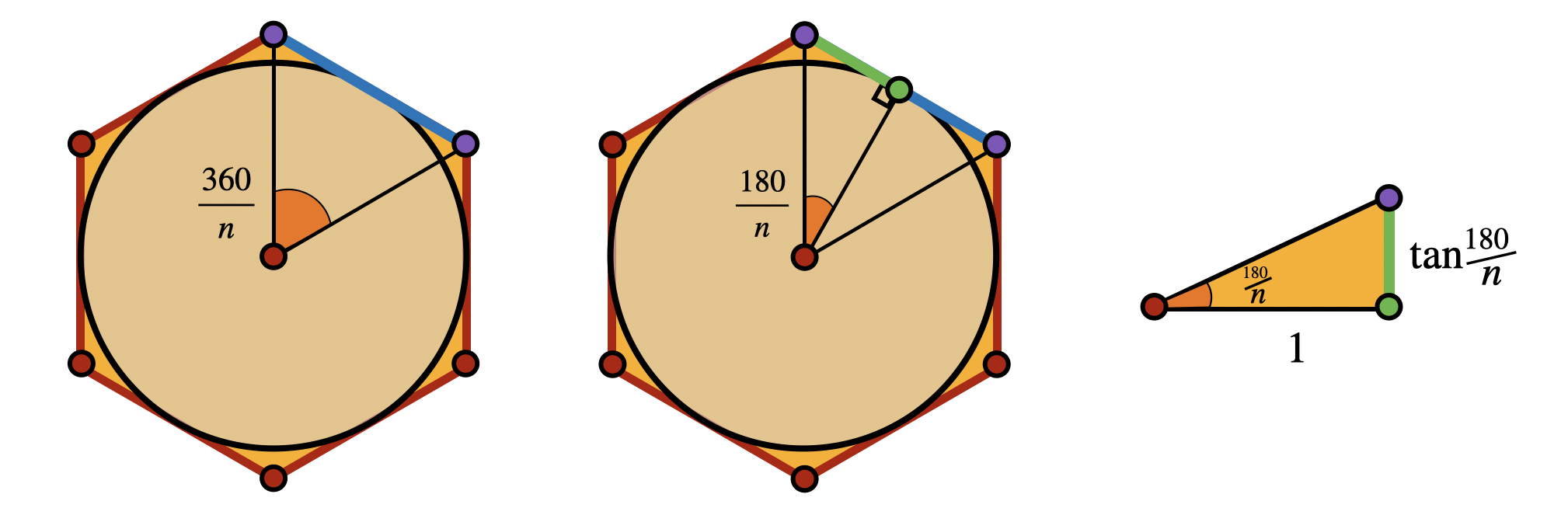

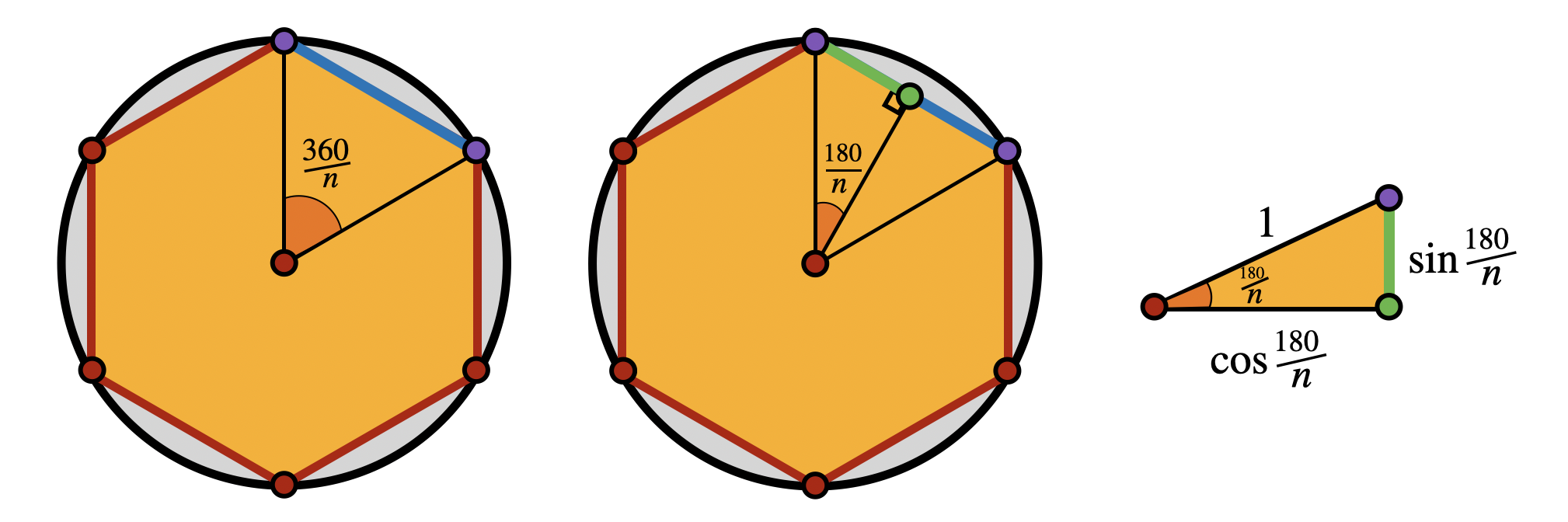
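With a modern trigonometry library these bounds are easy to tabulate. For a unit circle we assume the standard formulas: an inscribed regular n-gon has perimeter 2n sin(π/n) and area (n/2) sin(2π/n), while a circumscribed one has perimeter 2n tan(π/n) and area n tan(π/n). Calling `math.sin` and `math.pi` quietly presupposes knowledge of π, so this sketch is a check on the bounds rather than a way of discovering π; the function name `polygon_bounds` is our own.

```python
import math

def polygon_bounds(n):
    """Half-perimeters and areas of regular n-gons inscribed in and
    circumscribed about the unit circle."""
    angle = math.pi / n
    return {
        "inscribed_half_perimeter": n * math.sin(angle),
        "inscribed_area": n * math.sin(2 * angle) / 2,
        "circumscribed_half_perimeter": n * math.tan(angle),
        "circumscribed_area": n * math.tan(angle),
    }

for n in (6, 12, 24, 48, 96):
    b = polygon_bounds(n)
    print(n, b["inscribed_half_perimeter"], b["circumscribed_half_perimeter"])
```

For the circumscribed polygons the area and the half-perimeter coincide for every n, which is exactly the relationship Archimedes exploits.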

Calculating the area and perimeter of regular

Proposition 2 (Area of a Circumscribed Polygon) The area of a regular

Proposition 3 (Perimeter of a Circumscribed Polygon) The perimeter of a regular

Proposition 4 (Area of an Inscribed Polygon) The area of a regular

Where we used the trigonometric identity

Proposition 5 (Perimeter of an Inscribed Polygon) The perimeter of a regular

Using these, Archimedes calculated all the way up to the 96-gon, which provided him with the estimates

This was the best estimate of

Working with the

The lower bound here,

Remark 1. The next best rational approximation is

Proving

While impressive, Archimedes’ main goal was not the approximate calculation above, but rather an exact theorem. He wanted to understand the true relationship between the area and perimeter of the circle, and wished to use these approximations as a guide to what is happening with the real circle, “out at infinity”.

To understand this case, Archimedes argues that as

But, now look carefully at the form of the expressions we derived for the circumscribing polygons in Proposition 2 and Proposition 3:

Here, we do not need to worry about explicitly calculating

- Each polygon is built out of

- The area of a triangle is half its base times its height

- The height of each triangle is 1 (the radius of the circle)

- Thus, the area is the sum of half of all the bases, or half the perimeter!

But since this exact relationship holds for every single value of

Theorem 3 (Archimedes)

Troubles With Limits

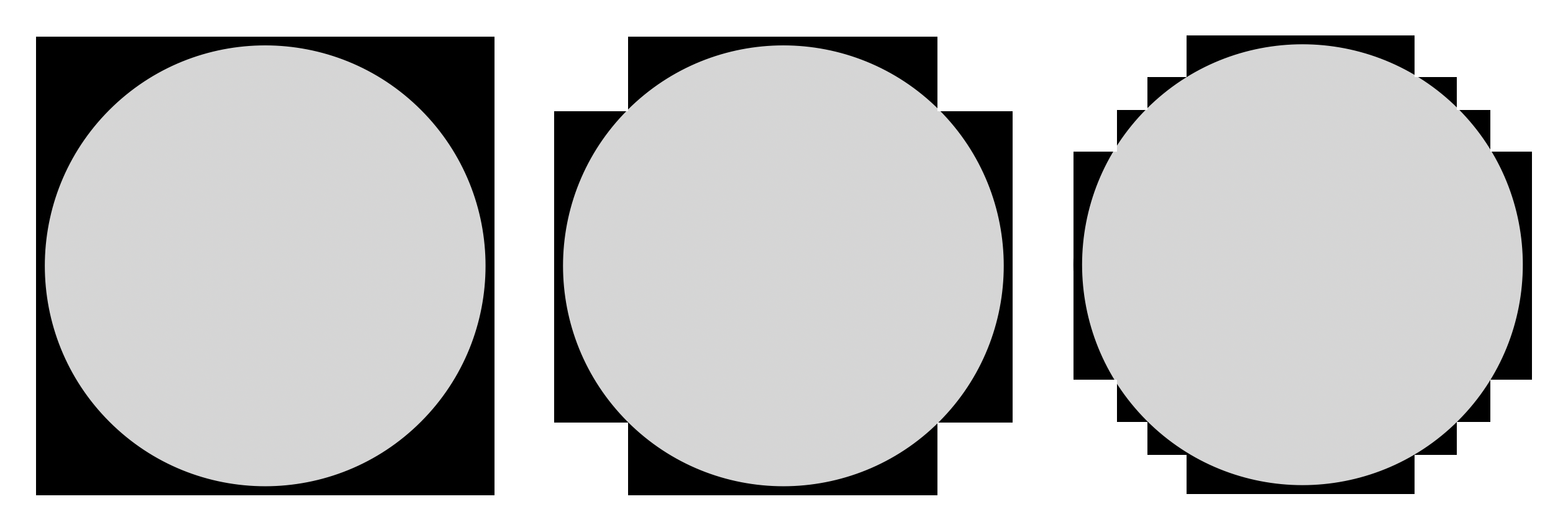

Archimedes again leaves us with an argument so elegant and deceptively simple that it’s easy to under-appreciate its subtlety and immediately fall prey to contradiction. What if we attempt to repeat Archimedes’ argument, but with a different sequence of polygons approaching the circle?

Remark 2. To be fair to the master, Archimedes is much, much more careful in his paper than I was above, so part of the apparent simplicity is a consequence of my omission.

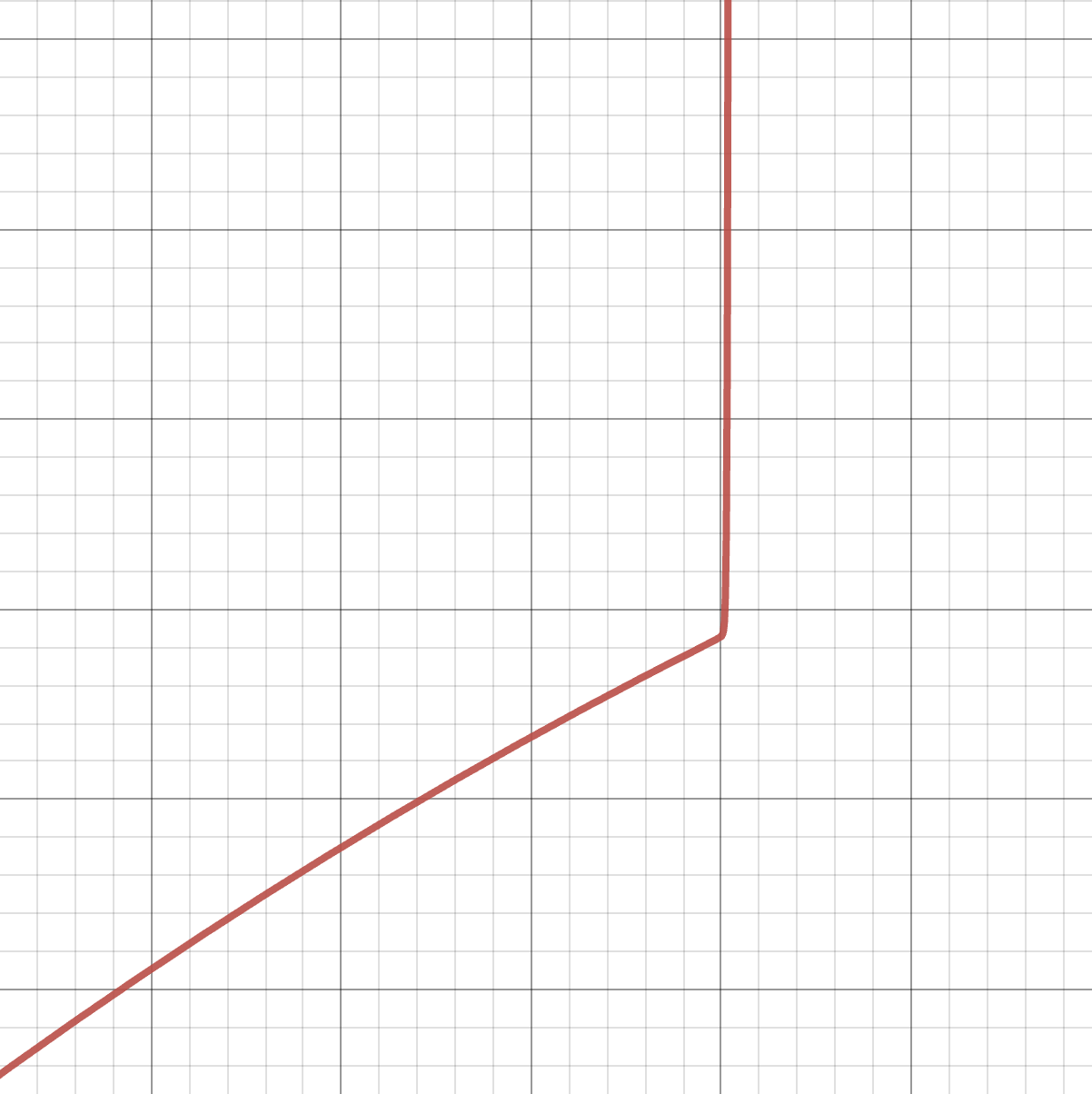

For example, what if we start with a square circumscribing the circle, and then at each stage produce a new polygon with the following rule:

- At each corner of the polygon, find the largest square that fits within the polygon, and remains outside the circle. Then remove this square.

Exactly as in Archimedes’ example, this sequence of polygons approaches the circle as we repeat over and over. In fact, in the limit this sequence literally becomes the circle (meaning that after infinitely many steps, there are no points of the resulting shape remaining outside the circle at all). Thus, just as for our original sequence of polygons, we expect that the areas and perimeters of these shapes approach the area and perimeter of the circle itself. That is,

While the behavior of

Since adding a dent does not change the length of the perimeter, each polygon in our sequence has exactly the same perimeter as the original! The original perimeter is easy to calculate: each side of the square has the length of a diameter of the unit circle, so its total perimeter is 8. But since this perimeter both never changes and converges in the limit to the circle’s circumference, we have just derived the amazing fact that

This is inconsistent with what we learn from Archimedes’ argument, which shows that

Exercise 7 (Convergence to the Diagonal) We can run an argument analogous to the above which proves that

Prove that as

But the Pythagorean theorem tells us that its length must be

It’s quite difficult to pinpoint exactly what goes wrong here, and thus this presents a particularly strong argument for why we need analysis: without a rigorous understanding of infinite processes and limits, we can never be sure whether our seemingly reasonable calculations give the right answers or lies!
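A numerical sketch makes the paradox vivid. Rather than the exact square-removal rule used for the circle above, it uses a simpler staircase with the same key feature (an assumption made to keep the code short): the boundary consists of horizontal and vertical segments hugging the circle from outside. Its outer corners get as close to the circle as we like and its area approaches π, yet its perimeter stays at 8. The function name `staircase` is hypothetical.

```python
import math

def staircase(n):
    """Staircase polygon circumscribing the unit circle, n steps per quadrant.

    In the first quadrant the step over [i/n, (i+1)/n] has height
    sqrt(1 - (i/n)**2), the circle's height at the left end, so the staircase
    stays outside the circle. Returns (perimeter, area, max_gap), where
    max_gap is the largest distance from an outer corner to the circle.
    """
    xs = [i / n for i in range(n + 1)]
    heights = [math.sqrt(1 - x * x) for x in xs[:-1]]
    horizontal = sum(xs[i + 1] - xs[i] for i in range(n))
    vertical = sum(heights[i] - heights[i + 1] for i in range(n - 1)) + heights[-1]
    area = sum(h * (xs[i + 1] - xs[i]) for i, h in enumerate(heights))
    max_gap = max(math.hypot(xs[i + 1], h) - 1 for i, h in enumerate(heights))
    # By symmetry, the whole shape is four copies of this quadrant.
    return 4 * (horizontal + vertical), 4 * area, max_gap

for n in (4, 64, 1024, 16384):
    perimeter, area, gap = staircase(n)
    print(f"n={n:6d}  perimeter={perimeter:.12f}  area={area:.6f}  max gap={gap:.2e}")
```

The horizontal pieces in each quadrant always add up to 1 and the vertical pieces to 1, no matter how fine the steps, so "close to the circle at every point" does not force "close in perimeter".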

Estimating

With our modern access to calculator technology, the trigonometric formulas above essentially solve the problem: for example, plug in

But this poses a historical problem: of course the ancients did not have a calculator, so how did they compute such accurate approximations millennia ago? And there’s also a potential logical problem lurking in the background: inside our calculator there is some algorithm computing the trigonometric functions, and perhaps that algorithm depends on already knowing something about the value of

To compute their estimates, both Archimedes and Zu Chongzi landed on an idea similar to the Babylonians and their computation of

Exercise 8 (The Doublings of Zu Chongzi) How many times did Zu Chongzi double the sides of a hexagon to reach the 24,576-gon?

Following Archimedes, we’ll look at the doubling procedure for the perimeter of inscribed polygons: given

Definition 4 (Half Angle Identities)

Also making use of the Pythagorean identity

Let’s write

This sort of relationship is called a recurrence relation, or a recursively defined sequence as it tells us how to compute the next term in the sequence if we have the previous one. Notice there are no more trigonometric formulas in the recurrence - so if we can find the value

Example 2 (A Recurrence for

But, since

The incredible fact: even though we used trigonometry to derive this recurrence, we do not need to know how to evaluate any trigonometric functions to actually use it! All we need to be able to do is find the perimeter of some inscribed

But how can we get started? A beautiful observation of Archimedes was that a regular hexagon inscribed in the circle has perimeter exactly equal to 6, as it can be decomposed into six equilateral triangles, whose side length is the circle’s radius. And with that, we are off!

Example 3 (The Perimeter of an Inscribed 96-gon) Since

Using this, we know

Now doubling to the 48-gon,

One more doubling brings us to the 96-gon,

Numerically approximating this gives 6.282063901781019276222, which is more recognizable to us if we compute the half perimeter: 3.141031950890509638111.
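The doubling procedure needs nothing beyond arithmetic and square roots, so it is easy to automate. The sketch below is our own arrangement of the half-angle and Pythagorean identities and may be written differently from the recurrence in the text: with s = p/(2n) = sin(π/n), it uses the algebraically equivalent form sin(π/2n) = s / √(2(1 + √(1 - s²))), which avoids the loss of precision from subtracting nearly equal numbers in 1 - √(1 - s²). The function names are hypothetical. Starting from the hexagon’s perimeter of 6, four doublings reach the 96-gon.

```python
import math

def double_inscribed_perimeter(p, n):
    """Given the perimeter p of a regular n-gon inscribed in the unit circle,
    return the perimeter of the inscribed regular 2n-gon (no trig calls)."""
    s = p / (2 * n)                      # half of one side = sin(pi/n)
    c = math.sqrt(1 - s * s)             # cos(pi/n), from the Pythagorean identity
    half = s / math.sqrt(2 * (1 + c))    # sin(pi/(2n)), cancellation-free half-angle form
    return 2 * (2 * n) * half            # 2n sides, each of length 2*sin(pi/(2n))

def archimedes_perimeters(doublings=4):
    """Perimeters of inscribed 6-, 12-, 24-, ... gons, starting from the hexagon."""
    n, p = 6, 6.0                        # hexagon: six equilateral triangles of side 1
    table = [(n, p)]
    for _ in range(doublings):
        p = double_inscribed_perimeter(p, n)
        n *= 2
        table.append((n, p))
    return table

for n, p in archimedes_perimeters():
    print(n, p, p / 2)
```

Comparing the 96-gon’s value with 192 sin(π/96) from the library is a useful sanity check, though the recurrence itself never calls a trigonometric function.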

Exercise 9 Find a recurrence relation for the area

Exercise 10 Let

Hint: you’ll need some trig identities to write everything in terms of tangent! Use this to find a recurrence relation for

After all of this, we are still left with a fundamental question: what sort of number is

Convergence, Concern and Contradiction

Madhava, Leibniz &

Madhava was an Indian mathematician who discovered many infinite expressions for trigonometric functions in the 1300s, results which today are known as Taylor series after Brook Taylor, who worked with them in 1715. In a particularly important example, Madhava found a formula to calculate the arc length along a circle in terms of the tangent: or, phrased more geometrically, the arc of a circle contained in a triangle with base of length

The first term is the product of the given sine and radius of the desired arc divided by the cosine of the arc. The succeeding terms are obtained by a process of iteration when the first term is repeatedly multiplied by the square of the sine and divided by the square of the cosine. All the terms are then divided by the odd numbers 1, 3, 5, …. The arc is obtained by adding and subtracting respectively the terms of odd rank and those of even rank.

As an equation, this gives

If we take the arclength

This result was also derived by Leibniz (one of the founders of modern calculus), using a method close to something you might see in Calculus II these days. It goes as follows: we know (say from the last chapter) the sum of the geometric series

Thus, substituting in

and the right hand side of this is the derivative of arctangent! So, anti-differentiating both sides of the equation yields

Finally, we take this result and plug in

This argument is completely full of steps that should make us worried:

- Why can we substitute a variable into an infinite expression and ensure it remains valid?

- Why is the derivative of arctan a rational function?

- Why can we integrate an infinite expression?

- Why can we switch the order of taking an infinite sum and integration?

- How do we know which values of
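The last question can at least be probed numerically. The sketch below compares partial sums of x - x³/3 + x⁵/5 - ... with the library arctangent at a few sample points of our choosing; it is evidence, not proof. At x = 1, where four times the sum should approach π, convergence is very slow, and just beyond it the partial sums blow up. The function name is hypothetical.

```python
import math

def arctan_partial_sum(x, n_terms):
    """Sum of the first n_terms of x - x**3/3 + x**5/5 - x**7/7 + ..."""
    return sum((-1) ** k * x ** (2 * k + 1) / (2 * k + 1) for k in range(n_terms))

for x in (0.5, 1.0, 1.1):
    for n in (10, 100, 1000):
        print(f"x={x}, {n} terms: {arctan_partial_sum(x, n):.8g}  (atan: {math.atan(x):.8g})")
```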

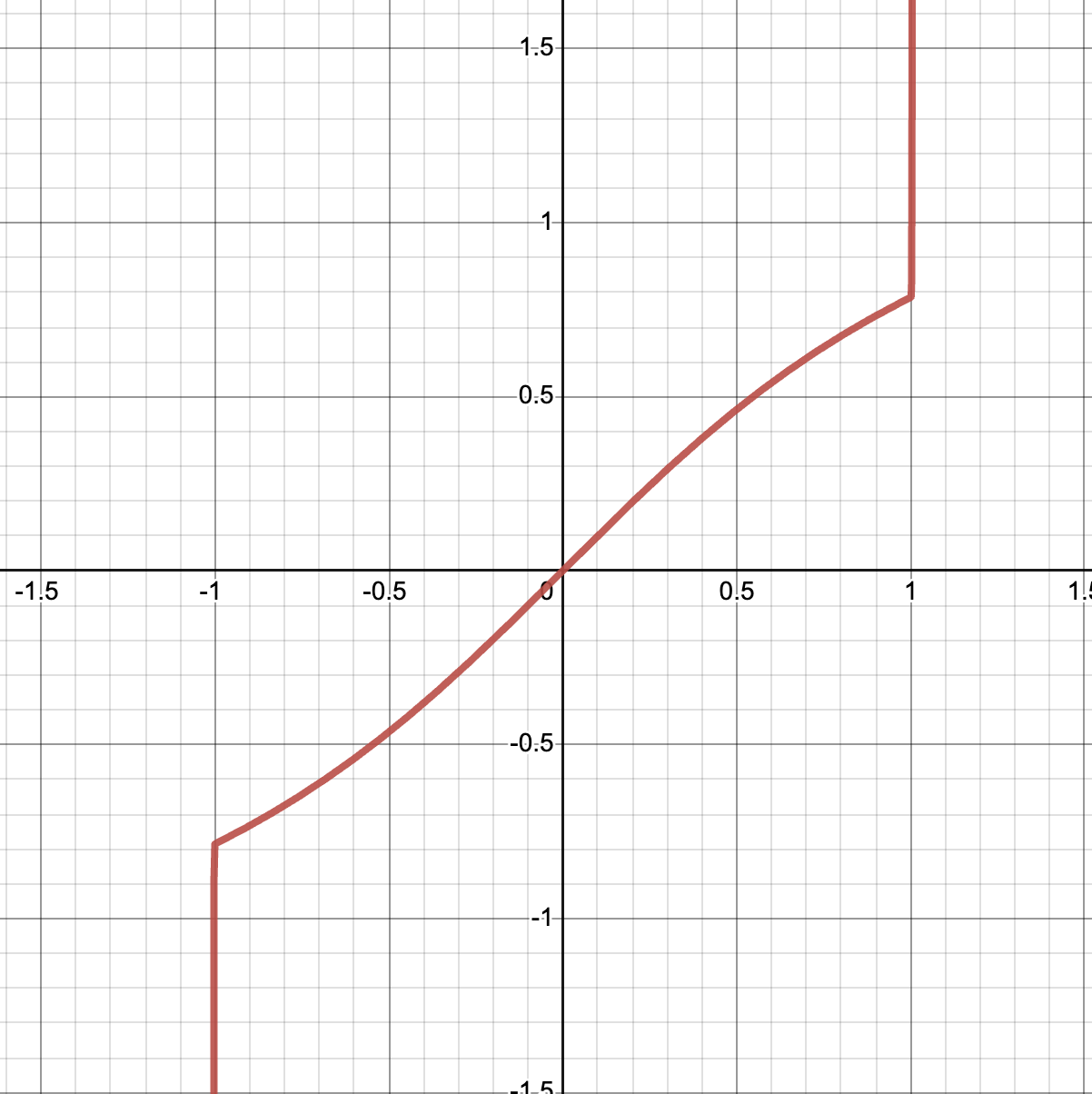

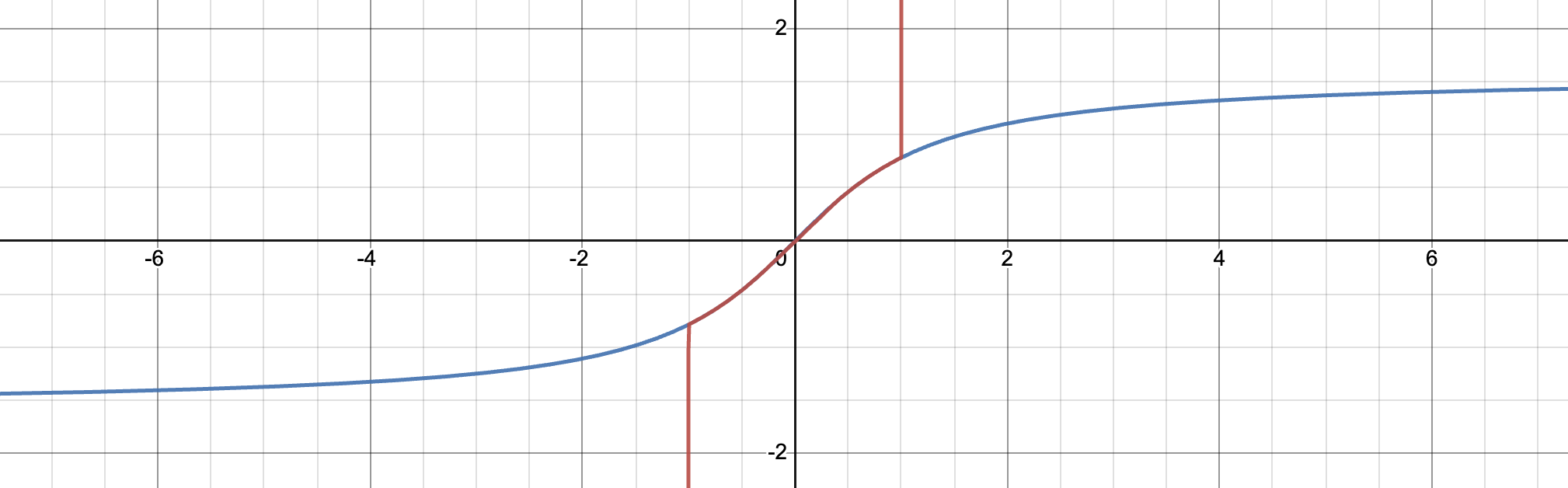

But beyond all of this, we should be even more worried if we try to plot the graphs of the partial sums of this supposed formula for the arctangent.

The infinite series we derived seems to match the arctangent exactly for a while, and then abruptly stop and shoot off to infinity. Where does it stop? Right at the point we are interested in,

And perhaps, before we conclude that the series simply always converges at the endpoints, it turns out that at the other endpoint

Dirichlet &

In 1827, Dirichlet was studying the sums of infinitely many terms, thinking about the alternating harmonic series

Like the previous example, this series naturally emerges from manipulations in calculus: beginning once more with the geometric series

Finally, plugging in

What happens if we multiply both sides of this equation by

We can simplify this expression a bit, by re-ordering the terms to combine similar ones:

After simplifying, we’ve returned to exactly the same series we started with! That is, we’ve shown

What does this tell us? Well, the only difference between the two equations is the order in which we add the terms. And, we get different results! This reveals perhaps the most shocking discovery of all, in our time spent doing dubious computations: infinite addition is not always commutative, even though finite addition always is.
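This sensitivity to order is easy to see numerically. The sketch below uses a standard rearrangement that may differ from the one in the text: it takes the terms of 1 - 1/2 + 1/3 - 1/4 + ... and groups them as one positive term followed by two negative ones. As the blocks run on, every term is used exactly once, yet the partial sums settle near half of ln 2 instead of ln 2. The function names are our own.

```python
import math

def alternating_harmonic(n_terms):
    """1 - 1/2 + 1/3 - 1/4 + ..., in the usual order."""
    return sum((-1) ** (k + 1) / k for k in range(1, n_terms + 1))

def one_positive_two_negative(n_blocks):
    """Same terms, regrouped as (1 - 1/2 - 1/4) + (1/3 - 1/6 - 1/8) + ...

    Block k uses the positive term 1/(2k-1) and the negative terms
    1/(4k-2) and 1/(4k); over all k, each term of the series appears once.
    """
    return sum(1 / (2 * k - 1) - 1 / (4 * k - 2) - 1 / (4 * k)
               for k in range(1, n_blocks + 1))

print(alternating_harmonic(100000), math.log(2))
print(one_positive_two_negative(100000), math.log(2) / 2)
```

Each block simplifies to 1/(2(2k-1)) - 1/(4k) = (1/2)(1/(2k-1) - 1/(2k)), which explains the factor of one half.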

Here’s an even more dubious-looking example where we can prove that

Now, rewrite each of the zeroes as

Now, do some re-arranging to this:

Make sure to convince yourselves that all the same terms appear here after the rearrangement!

Simplifying this a bit shows a pattern:

Which, after removing the parentheses, is the familiar series

Infinite Expressions in Trigonometry

The sine function (along with the other trigonometric, exponential, and logarithmic functions) differs from the common functions of early mathematics (polynomials, rational functions and roots) in that it is defined not by a formula but geometrically.

Such a definition is difficult to work with if one actually wishes to compute: for example, Archimedes after much trouble managed to calculate the exact value of

One big question about this procedure is: why in the world should this work? We found a function that

Infinite Product of Euler

One famous infinite expression for the sine function arose from thinking about the behavior of polynomials, and the relation of their formulas to their roots. As an example consider a quartic polynomial

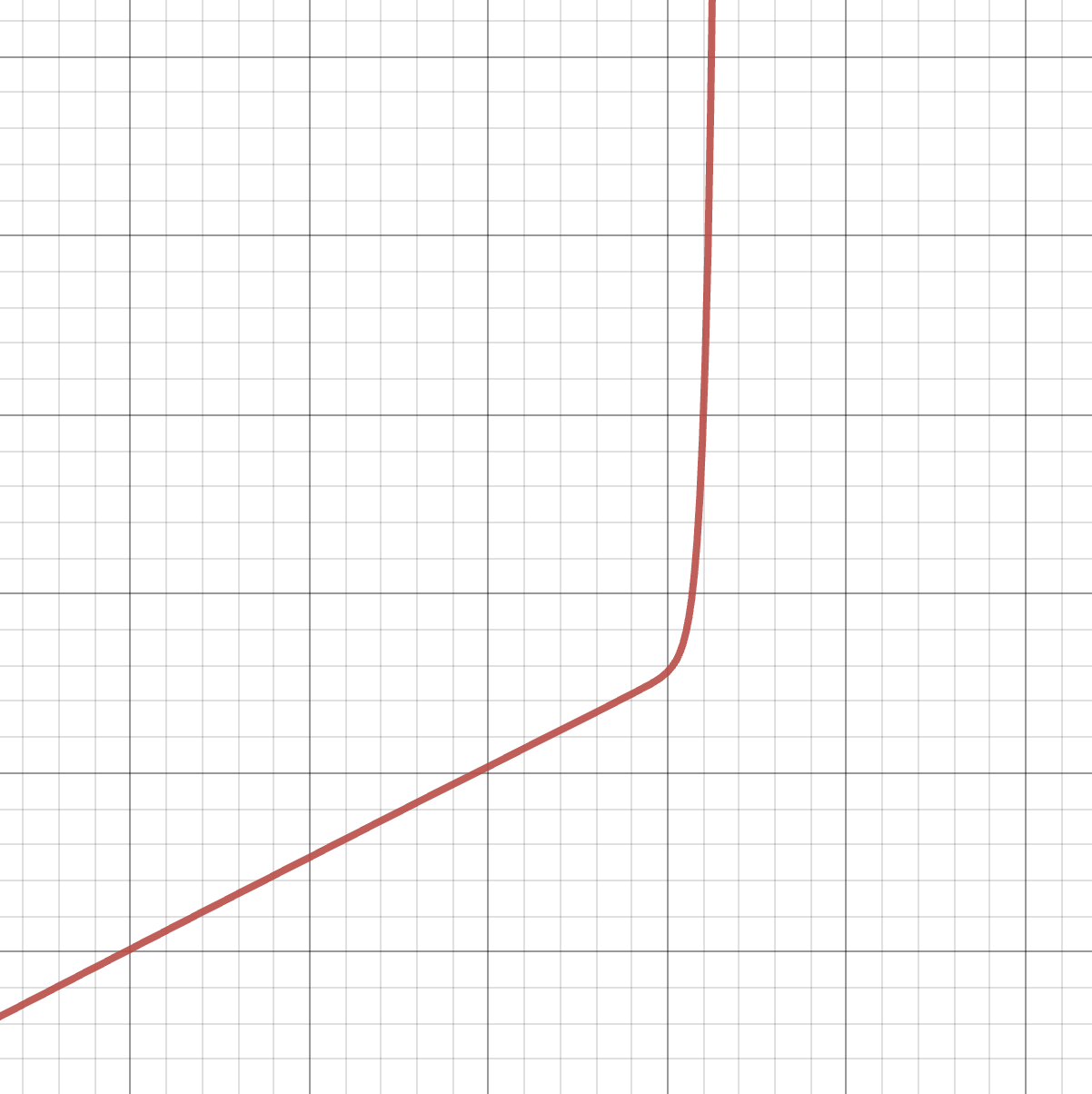

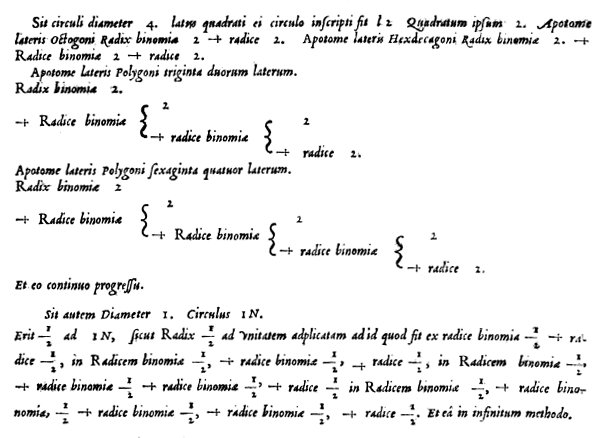

In 1734, Euler attempted to apply this same reasoning in the infinite case to the trigonometric function


Its roots agree with those of

Euler noticed all the factors come in pairs, each of which represented a difference of squares.

Not worrying about the fact that infinite multiplication may not be commutative (a worry we came to appreciate with Dirichlet, but this was after Euler’s time!), we may re-group this product pairing off terms like this, to yield

Finally, we may multiply back through by

Proposition 6 (Euler)

This incredible identity is actually correct; there’s only one problem: the argument itself is wrong!
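Whatever is wrong with the argument, the identity itself can be tested numerically, written in the form sin x = x(1 - x²/π²)(1 - x²/(4π²))(1 - x²/(9π²))···. The sketch below multiplies x by the first N factors and compares with the library sine at sample points chosen by us; the truncation error shrinks only roughly like 1/N. The function name is hypothetical.

```python
import math

def euler_sine_product(x, n_factors):
    """x * prod_{k=1}^{n_factors} (1 - x**2 / (k*pi)**2), a truncated Euler product."""
    result = x
    for k in range(1, n_factors + 1):
        result *= 1 - x * x / (k * math.pi) ** 2
    return result

for x in (1.0, 2.5):
    for n in (10, 100, 10000):
        print(f"x={x}, {n} factors: {euler_sine_product(x, n):.10f}  sin(x) = {math.sin(x):.10f}")
```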

Exercise 11 In his argument, Euler crucially uses that if we know

- all the zeroes of a function

- the value of that function is 1 at

then we can factor the function as an infinite polynomial in terms of its zeroes. This implies that a function is completely determined by its value at

Show that this is a serious flaw in Euler’s reasoning by finding a different function that has all the same zeroes as

Exercise 12 (The Wallis Product for

Using Euler’s infinite product for

The Basel Problem

The Italian mathematician Pietro Mengoli proposed the following problem in 1650:

Definition 5 (The Basel Problem) Find the exact value of the infinite sum

By directly computing the first several terms of this sum one can get an estimate of the value, for instance adding up the first 1,000 terms we find

so we might feel rather confident that the final answer is somewhat close to 1.64. But the interesting math problem isn’t to approximate the answer, but rather to figure out something exact, and knowing the first few decimals here isn’t of much help.

This problem was attempted by famous mathematicians across Europe over the next 80 years, and all of them failed, until a relatively unknown 28-year-old Swiss mathematician named Leonhard Euler published a solution in 1734 and immediately shot to fame. (In fact, this problem is named the Basel problem after Euler’s hometown.)

Proposition 7 (Euler)

Euler’s solution begins with two different expressions for the function

Because two polynomials are the same if and only if the coefficients of all their terms are equal, Euler attempts to generalize this to infinite expressions, and equate the coefficients for

From the series, we can again simply read off the coefficient as

Which quickly leads to a solution to the original problem, after multiplying by

Euler had done it! There are of course many dubious steps taken along the way in this argument, but calculating the numerical value,

We find it to be exactly the number the series is heading towards. This gave Euler the confidence to publish, and the rest is history.
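For comparison, a few lines of Python reproduce the numerical evidence: the partial sums of 1 + 1/4 + 1/9 + ... creep toward π²/6 ≈ 1.6449, and the remaining gap after N terms stays close to 1/N (a standard comparison with an integral, stated here without proof). The function name is our own.

```python
import math

def basel_partial_sum(n_terms):
    """1/1**2 + 1/2**2 + ... + 1/n_terms**2, added from the smallest term up."""
    return sum(1 / (k * k) for k in range(n_terms, 0, -1))

target = math.pi ** 2 / 6
for n in (10, 100, 1000, 10000):
    s = basel_partial_sum(n)
    print(f"{n:6d} terms: {s:.10f}   gap to pi^2/6: {target - s:.3e}   1/n: {1 / n:.3e}")
```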

But we analysis students should be looking for potential troubles in this argument. What are some that you see?

Viète’s Infinite Trigonometric Identity

Viète was a French mathematician in the mid 1500s who wrote down, for the first time in Europe, an exact expression for

Proposition 8 (Viète’s formula for

How could one derive such an incredible looking expression? One approach uses trigonometric identities…an infinite number of times! Start with the familiar function

Now we may apply the double angle identity once again to the term

and again

and again

And so on. After the

Viète realized that as

Proposition 9 (Viète’s Trigonometric Identity)

An incredible, infinite trigonometric identity! Of course, there’s a huge question about its derivation: are we absolutely sure we are justified in making the denominator there equal to

Now, we are left just to simplify the right hand side into something computable, using more trigonometric identities! We know

Substituting these all in gives the original product. And, while this derivation has a rather dubious step in it, the end result seems to be correct! Computing the first ten terms of this product on a computer yields
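The same computation is a few lines of Python. The sketch below builds the nested radicals √2, √(2 + √2), √(2 + √(2 + √2)), ... by the rule a_{k+1} = √(2 + a_k), multiplies together the factors a_k/2, and reports 2 divided by the product, which should approach π. Since a_k/2 = cos(π/2^{k+1}), the product of the first n factors also equals 1/(2^n sin(π/2^{n+1})) exactly, which gives an independent check. The function name is hypothetical.

```python
import math

def viete_product(n_factors):
    """Product of the first n_factors terms of Viete's formula for 2/pi."""
    a = 0.0
    product = 1.0
    for _ in range(n_factors):
        a = math.sqrt(2 + a)   # sqrt(2), sqrt(2 + sqrt(2)), ...
        product *= a / 2
    return product

for n in (1, 2, 5, 10, 20):
    p = viete_product(n)
    print(f"{n:2d} factors: product = {p:.12f}, 2/product = {2 / p:.12f}")
```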

The Infinitesimal Calculus

In trying to formalize many of the above arguments, mathematicians needed to put the calculus steps on a firm footing. And this comes with a whole collection of its own issues. Arguments trying to explain in clear terms what a derivative or integral was really supposed to be often led to nonsensical steps that cast doubt on the entire procedure. Indeed, the history of calculus is itself so full of confusion that it alone is often taken as the motivation to develop a rigorous study of analysis. Because we have already seen so many other troubles that come from the infinite, we will content ourselves with just one example here: what is a derivative?

The derivative is meant to measure the slope of the tangent line to a function. In words, this is not hard to describe. But like the sine function, this does not provide a means of computing, and we are looking for a formula. Approximate formulas are not hard to create: if

represents the slope of the secant line to

and then define the derivative as the infiniteth term in this sequence. But this is just incoherent, taken at face value. If

So, something else must be going on. One way out of this would be if our sequence of approximates did not actually converge to zero - maybe there were infinitely small nonzero numbers out there waiting to be discovered. Such hypothetical numbers were called infinitesimals.
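To see the tension concretely, take f(x) = x² at x = 3 (an example of our choosing). With exact rational arithmetic the secant slope with step h works out to exactly 6 + h: as h shrinks the slopes approach 6, but no nonzero step ever produces 6 itself, and the step h = 0 is not allowed at all. The helper names are hypothetical.

```python
from fractions import Fraction

def secant_slope(f, x, h):
    """Slope of the secant line of f between x and x + h (requires h != 0)."""
    return (f(x + h) - f(x)) / h

def square(t):
    return t * t

x = Fraction(3)
for k in range(1, 7):
    h = Fraction(1, 10 ** k)
    print(h, secant_slope(square, x, h))   # exactly 6 + h

try:
    secant_slope(square, x, Fraction(0))   # 0/0 is undefined
except ZeroDivisionError as err:
    print("h = 0:", err)
```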

Definition 6 (Infinitesimal) A positive number

This would resolve the problem as follows: if

But this leads to its own set of difficulties: it’s easy to see that if

Exercise 13 Prove this: if

So we can’t just define the derivative by saying “choose some infinitesimal

Let’s attempt to differentiate

Here we see the derivative is not what we expected, but rather is

But this is not very sensible: when exactly are we allowed to do this? If we can discard an infinitesimal whenever it’s added to a finite number, shouldn’t we already have done so with the

So the moment at which we throw away the infinitesimal matters deeply to the answer we get! This does not seem right. How can we fix this? One approach that was suggested was to say that we cannot throw away infinitesimals, but that the square of an infinitesimal is so small that it is precisely zero: that way, we keep every infinitesimal but discard any higher powers. A number satisfying this property was called nilpotent, as nil was another word for zero, and potency was an old term for powers (

Definition 7 A number

If our infinitesimals were nilpotent, that would solve the problem we ran into above. Now, the calculation for the derivative of

But, in trying to justify just this one calculation we’ve had to invent two new types of numbers that had never occurred previously in math: we need positive numbers smaller than any rational, and we also need them (or at least some of those numbers) to square to precisely zero. Do such numbers exist?
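One can at least compute mechanically with such a symbol. The sketch below adjoins a formal symbol ε with ε² = 0 and works with pairs a + bε; this construction is usually called the dual numbers, and the technique is known as forward-mode automatic differentiation. The class name `Dual`, the helper names and the example polynomials are ours. Note what it does not settle: ε here is a purely formal symbol, not a positive number smaller than every rational, so the question above remains open.

```python
class Dual:
    """Numbers a + b*eps with the rule eps**2 = 0 (dual numbers)."""

    def __init__(self, real, eps=0):
        self.real = real
        self.eps = eps

    @staticmethod
    def _lift(other):
        return other if isinstance(other, Dual) else Dual(other)

    def __add__(self, other):
        other = Dual._lift(other)
        return Dual(self.real + other.real, self.eps + other.eps)

    __radd__ = __add__

    def __sub__(self, other):
        other = Dual._lift(other)
        return Dual(self.real - other.real, self.eps - other.eps)

    def __mul__(self, other):
        other = Dual._lift(other)
        # (a + b eps)(c + d eps) = ac + (ad + bc) eps + bd eps**2, and eps**2 = 0
        return Dual(self.real * other.real,
                    self.real * other.eps + self.eps * other.real)

    __rmul__ = __mul__

    def __repr__(self):
        return f"{self.real} + {self.eps}*eps"


def derivative_via_nilpotent(f, x):
    """Return the eps-coefficient of f(x + eps); for polynomials this is f'(x)."""
    return f(Dual(x, 1)).eps


def cube(t):
    return t * t * t

print(cube(Dual(2, 1)))                   # 8 + 12*eps
print(derivative_via_nilpotent(cube, 2))  # 12
```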